It’s an odd question, yet determining size is the tacit assumption behind traditional data management efforts. That assumption exists because, traditionally, there has always been a structure built in rows and columns to store information. This is based on physical thinking.

Size matters when building physical things. Your bookshelf needs to be tall, wide, and deep enough for your books. If the garage is too small, you won’t be able to fit your truck.

Rows and columns have been around since the early days of data processing, but Dan Bricklin brought this paradigm to the masses when he co-created VisiCalc. His digital structure allowed us to perform operations on entire rows or columns of information. This is a powerful concept that enables a great deal of analysis and insight. It is, however, still rooted in the same constraint as the bookshelf or garage: how tall, wide, and deep must the structure be?

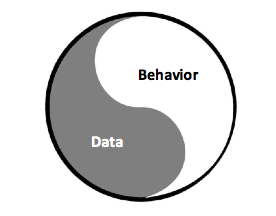

Semantic technology flips this constraint on its head by shifting away from structure and focusing on meaning.

Meaning, unlike books, has no physical size or dimension.

Meaning will have volume when we commit it to a storage system, but it remains shapeless just like water. There is no concept of having to organize water in a particular order or structure it within a vessel. It simply fills the available space.

At home, we use water in its raw form. It’s delivered to us through a system of pipes as a liquid, which is then managed according to its default molecular properties. When thirsty, we pour it into a glass. If we want a cold beverage, we freeze it in an ice cube tray. Heated into steam, it gives us the ability to make cappuccino.

We don’t have different storage or pipes to manage delivery in each of these forms; water is stored in reservoirs and towers and is delivered through a system of pipes as a liquid. Only after delivery do we begin to change it for our consumption patterns. Storage and consumption are decoupled from one another.

Semantic technology treats meaning like water. Data is stored in a knowledge graph, in the form of triples, where it remains fluid. Only when we extract meaning do we change it from triples into a form that serves our consumption patterns. Semantics effectively decouples storage from consumption, freeing the data to be applied in many ways previously unavailable.

Meaning can still be extracted in rows and columns where the power of aggregate functions can be applied. It can also be extracted as a graph whose shape can be studied, manipulated, and applied to different kinds of complex problem solving. This is possible because semantic technology works at the molecular level, which prevents structure from being imposed prematurely.
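To make this concrete, here is a minimal sketch in plain Python, with no semantic-web library involved. The `ex:` identifiers, the sample facts, and the helper names `to_rows` and `to_adjacency` are all invented for illustration; a real knowledge graph would more likely be queried with a language such as SPARQL. The same set of triples is projected once into rows and columns and once into a graph-shaped adjacency map.

```python
from collections import defaultdict

# Hypothetical sample data: each fact is a (subject, predicate, object) triple.
TRIPLES = {
    ("ex:alice", "ex:worksFor", "ex:acme"),
    ("ex:alice", "ex:salary", 120),
    ("ex:bob", "ex:worksFor", "ex:acme"),
    ("ex:bob", "ex:salary", 100),
    ("ex:acme", "ex:locatedIn", "ex:denver"),
}


def to_rows(triples, columns):
    """Project triples into one row per subject, one column per requested predicate.

    Assumes each predicate is single-valued per subject; a later value
    would overwrite an earlier one.
    """
    rows = defaultdict(dict)
    for s, p, o in triples:
        if p in columns:
            rows[s][p] = o
    # Keep only subjects that have a value for every requested column.
    return {s: r for s, r in rows.items() if len(r) == len(columns)}


def to_adjacency(triples):
    """Project the same triples into a graph: subject -> list of (predicate, object) edges."""
    graph = defaultdict(list)
    for s, p, o in triples:
        graph[s].append((p, o))
    return {s: sorted(edges, key=str) for s, edges in graph.items()}


# Tabular consumption: aggregate over a column.
rows = to_rows(TRIPLES, ["ex:worksFor", "ex:salary"])
total_salary = sum(row["ex:salary"] for row in rows.values())

# Graph consumption: follow edges out of a node.
graph = to_adjacency(TRIPLES)
```

Neither projection changes what is stored. The shape is chosen at the moment of consumption, which is the point of the water analogy.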

Knowledge graphs are made up of globally unique information units (atoms) which are then combined into triples (molecules). Unlike water’s two elements, ontologies establish a large collection of elements from which the required set of triples (molecules) is created. Each triple is composed of a Subject, a Predicate, and an Object, and is an assertion of some fact about the Subject. Triples in the knowledge graph are all independently floating around in a database affectionately known as a “bag of triples” because of its fluid nature.
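As a rough illustration of the “bag of triples” idea, the sketch below models a triple as a small Python tuple and the bag as an unordered set. The `https://example.org/` namespace, the `Triple` type, and the sample facts are assumptions made up for this example, not part of any particular triple store.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    """One assertion about a subject."""
    subject: str
    predicate: str
    obj: str  # an IRI or a literal value


# Hypothetical namespace; any globally unique identifier scheme would do.
EX = "https://example.org/"

bag_a = {
    Triple(EX + "water", EX + "hasElement", EX + "hydrogen"),
    Triple(EX + "water", EX + "hasElement", EX + "oxygen"),
    Triple(EX + "water", EX + "boilsAtCelsius", "100"),
}

# Build a second bag from the same assertions, added in a different order.
bag_b = set()
for triple in reversed(sorted(bag_a)):
    bag_b.add(triple)
# Asserting a fact that is already present has no effect.
bag_b.add(Triple(EX + "water", EX + "hasElement", EX + "oxygen"))


def facts_about(bag, subject):
    """Return every (predicate, object) pair asserted about one subject."""
    return sorted((t.predicate, t.obj) for t in bag if t.subject == subject)
```

Because the bag is a set, `bag_a == bag_b` holds regardless of insertion order, and no row or column position has to be chosen before a fact can be stored.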

Semantic technology stores meaning in a knowledge graph using Description Logics to formalize what our minds conceptualize. Just as water can be stored in many containers and still come out as water, a knowledge graph can be distributed across multiple databases and still contain the same meaning. Data storage and data consumption are separate concerns that should be decoupled from one another.
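The “many containers” claim can be sketched in a few lines of Python. Here in-memory sets stand in for separate databases, and `partition` and `merge` are hypothetical helper names chosen for this example; a production system would rely on its store's own federation or sharding features instead.

```python
import zlib


def partition(triples, n_stores):
    """Spread triples across n_stores containers using a deterministic hash."""
    stores = [set() for _ in range(n_stores)]
    for triple in triples:
        key = zlib.crc32(repr(triple).encode("utf-8"))
        stores[key % n_stores].add(triple)
    return stores


def merge(stores):
    """Pour every container back into a single bag of triples."""
    return set().union(*stores)


# Hypothetical sample facts as (subject, predicate, object) tuples.
knowledge = {
    ("ex:water", "ex:hasElement", "ex:hydrogen"),
    ("ex:water", "ex:hasElement", "ex:oxygen"),
    ("ex:ice", "ex:isFormOf", "ex:water"),
    ("ex:steam", "ex:isFormOf", "ex:water"),
}

stores = partition(knowledge, 3)
recombined = merge(stores)
```

Since each triple is a self-contained assertion, `recombined == knowledge` no matter how the triples were split up.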

Semantic technology is here, robust and mature, and fully ready to take on enterprise data management. Rows and columns have taken us a long way, but they are getting a bit soggy.

It’s time to stop imposing artificial structure when storing our data and instead focus on meaning. Let’s make semantic technology our default approach to handling the data tsunami.

You may be wondering what some of our Data-Centric Conference panel topics will actually look like and what the discussion will entail. This article from Forbes is an interesting take on knowledge graphs and is just the kind of thing we’ll be discussing at the Data-Centric Conference.

moment, as far as we know, there isn’t a full stack data-centric architecture available to direct implementation. What this means is that early adopters will have to roll their own.

will need to do. software-as-a-service are increasingly well established. Open source software is popular and readily available, and custom app development has become fairly straightforward.
